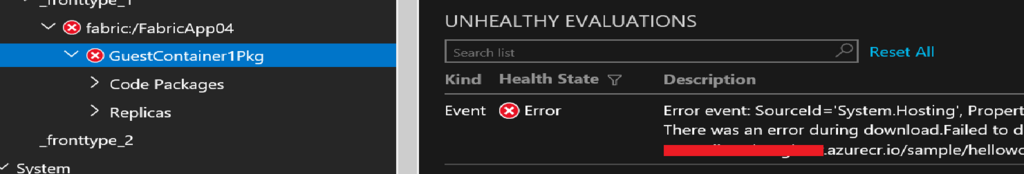

As you know, you can deploy Docker container images to Service Fabric clusters. However, if you use Windows container images, you need to pay attention to the "Instance Size" you choose for the cluster. If you use "hyperv" isolation mode and do not choose an "Ev3" or "Dv3" series Azure SKU, container activation fails with the error message below.

```
There was an error during CodePackage activation.Container failed to start for image:"your container image".azurecr.io/"your image name":"your tag name". container 4ef6c4ec64f3006db9f5cbe9541dcb77994ccd1ac8d018180b5d08ba0cf95803 encountered an error during CreateContainer: failure in a Windows system call: No hypervisor is present on this system. (0xc0351000) extra info: {"SystemType":"Container","Name":"4ef6c4ec64f3006db9f5cbe9541dcb77994ccd1ac8d018180b5d08ba0cf95803","Owner":"docker","IsDummy":false,"IgnoreFlushesDuringBoot":true,"LayerFolderPath":"C:\\ProgramData\\docker\\windowsfilter\\4ef6c4ec64f3006db9f5cbe9541dcb77994ccd1ac8d018180b5d08ba0cf95803","Layers":[{"ID":"1fd99b8d-bc0e-5048-9a10-5eaf17053f7a","Path":"C:\\ProgramData\\docker\\windowsfilter\\b8452e48f79716c4f811a2c80d3f32d4a37c9fb98fb179c6cffce7f0beed1e66"},{"ID":"758bc832-f0e5-55a8-a2ca-8db4d04cb9bd","Path":"C:\\ProgramData\\docker\\windowsfilter\\4e98f3616f260045e987e017b3894dcfa250c7f595997110c9900b02488e05f3"},{"ID":"91a87634-6f3e-59a9-9578-33049cc2ebaa","Path":"C:\\ProgramData\\docker\\windowsfilter\\f18e514d9d6c1d8d892856392b96f939d9f992cc7395d0b2d6f05e22216ac737"},{"ID":"245ef2e6-f122-5f3b-96ed-71d43099508b","Path":"C:\\ProgramData\\docker\\windowsfilter\\715c1bdc318c7b012e2f70d3798f4e1e79a96d2fa165bac377f7540030f1a1a6"},{"ID":"22fbc57d-ea4d-552b-9b3a-87e76e093d2b","Path":"C:\\ProgramData\\docker\\windowsfilter\\80a287a21e7eccba51d5110e25517639351e87f52067a7df382edcdaf312138b"},{"ID":"a451ab3e-a3fd-5db9-8c91-7d69034cd20a","Path":"C:\\ProgramData\\docker\\windowsfilter\\94c121ca13cfde5bf579f36687d5405d0487ad972dd18ff8f598870aa3a72b73"},{"ID":"17dbc8c4-0c4a-548b-857c-5e7928cce5f1","Path":"C:\\ProgramData\\docker\\windowsfilter\\9e9fa399255bb3ba2717dcdd6ca06d493fa189c6d6a0276d0713f2a524dd3d2a"},{"ID":"20a857ef-13bb-55cf-8a67-2e18591c66bc","Path":"C:\\ProgramData\\docker\\windowsfilter\\12e7f9bda0627587e24bb4d5a3fb91c3ed9b6b6943ac1d35244afac547796dd1"},{"ID":"6d4958bb-c89f-5390-9420-5ddaff8ef0ca","Path":"C:\\ProgramData\\docker\\windowsfilter\\a4f9f68812499ffdaed2e84a6b84d8d878286f4d726b91bb7970b618f1d8dd65"},{"ID":"9067a7bd-7072-5844-8e54-f91888468462","Path":"C:\\ProgramData\\docker\\windowsfilter\\7efa7258bfec74a13dc8bbd93d4d0bc108ac6443ae0691198ff6a9a692c703f7"},{"ID":"edf15855-a46a-593c-a2c2-e476ccd00f3f","Path":"C:\\ProgramData\\docker\\windowsfilter\\38b937d97d3e4149be3671ae411441b9e839e2e0e40c5f626479357a0de8da00"},{"ID":"b4b0754d-1b7f-5413-ad1c-63616d0c72ff","Path":"C:\\ProgramData\\docker\\windowsfilter\\8753c8e07b95e6d80767dd39ace29e6e1108992ec72e46140ba990287272f418"},{"ID":"11cfb709-b618-529b-9165-d6d12db7330c","Path":"C:\\ProgramData\\docker\\windowsfilter\\d4a3ef41985c9ed1d7308f1f8603e8527154104bd7b308fed9f731f863d29314"}],"HostName":"4ef6c4ec64f3","MappedDirectories":[{"HostPath":"d:\\svcfab\\log\\containers\\sf-15-aa4017f4-24d0-437a-b7b5-a48db8717a13_3b9b217e-374e-48ea-8ee5-ccf597e47d34","ContainerPath":"c:\\sffabriclog","ReadOnly":false,"BandwidthMaximum":0,"IOPSMaximum":0},{"HostPath":"d:\\svcfab\\_fronttype_0\\fabric","ContainerPath":"c:\\sfpackageroot","ReadOnly":true,"BandwidthMaximum":0,"IOPSMaximum":0},{"HostPath":"c:\\program files\\microsoft service fabric\\bin\\fabric\\fabric.code","ContainerPath":"c:\\sffabricbin","ReadOnly":true,"BandwidthMaximum":0,"IOPSMaximum":0},{"HostPath":"d:\\svcfab\\_app\\sfwithaspnetapptype_app15","ContainerPath":"c:\\sfapplications\\sfwithaspnetapptype_app15","ReadOnly":false,"BandwidthMaximum":0,"IOPSMaximum":0}],"SandboxPath":"C:\\ProgramData\\docker\\windowsfilter","HvPartition":true,"EndpointList":["a118be34-14d8-48df-87be-2abaf0f40160"],"HvRuntime":{"ImagePath":"C:\\ProgramData\\docker\\windowsfilter\\8753c8e07b95e6d80767dd39ace29e6e1108992ec72e46140ba990287272f418\\UtilityVM"},"Servicing":false,"AllowUnqualifiedDNSQuery":true,"DNSSearchList":"SFwithASPNetApp"}
```

Refer to "Create an Azure Service Fabric container application" on Microsoft Docs and pay attention to the note there. Next, check the ApplicationManifest.xml file in your Service Fabric project. Set the "Isolation" attribute of the "ContainerHostPolicies" element to "hyperv" only if your cluster nodes have the Hyper-V role enabled. The excerpt below uses Hyper-V isolation:

```xml
<ServiceManifestImport>
  <ServiceManifestRef ServiceManifestName="GuestContainer1Pkg" ServiceManifestVersion="1.0.5" />
  <ConfigOverrides />
  <Policies>
    <ContainerHostPolicies CodePackageRef="Code" Isolation="hyperv">
      <RepositoryCredentials AccountName="your account name" Password="your password" PasswordEncrypted="false" />
      <PortBinding ContainerPort="80" EndpointRef="GuestContainer1TypeEndpoint" />
    </ContainerHostPolicies>
  </Policies>
</ServiceManifestImport>
```
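If your application has many services, you can scan the manifest instead of reading it by hand. The following is a minimal sketch in Python, not part of the Service Fabric tooling, that lists every code package whose "ContainerHostPolicies" requests "hyperv" isolation. It assumes the manifest uses the usual Service Fabric namespace `http://schemas.microsoft.com/2011/01/fabric`, and the function name `find_hyperv_packages` is hypothetical.

```python
import sys
import xml.etree.ElementTree as ET

# Assumed default namespace of Service Fabric manifests; compare it with the
# xmlns attribute on the root element of your own ApplicationManifest.xml.
SF_NS = {"sf": "http://schemas.microsoft.com/2011/01/fabric"}


def find_hyperv_packages(root: ET.Element) -> list[tuple[str, str]]:
    """Hypothetical helper: return (ServiceManifestName, CodePackageRef) pairs
    whose ContainerHostPolicies element sets Isolation="hyperv"."""
    results = []
    for imp in root.findall("sf:ServiceManifestImport", SF_NS):
        ref = imp.find("sf:ServiceManifestRef", SF_NS)
        name = ref.get("ServiceManifestName", "?") if ref is not None else "?"
        for policy in imp.findall("sf:Policies/sf:ContainerHostPolicies", SF_NS):
            # Compare case-insensitively as a precaution against "HyperV" etc.
            if policy.get("Isolation", "").lower() == "hyperv":
                results.append((name, policy.get("CodePackageRef", "")))
    return results


if __name__ == "__main__":
    # Usage: python find_hyperv.py path/to/ApplicationManifest.xml
    if len(sys.argv) > 1:
        # ET.parse reads the file as bytes, so a UTF-8 BOM is handled.
        manifest_root = ET.parse(sys.argv[1]).getroot()
        for pkg, code in find_hyperv_packages(manifest_root):
            print(f"{pkg}/{code}: Isolation=hyperv (needs the Hyper-V role on the node)")
```

Every package it prints has to run on nodes where the Hyper-V role is available; the script file name in the usage comment is only an example.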

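This error is caused by running a Hyper-V container on a machine that does not have the Hyper-V role enabled. On an Azure VM, Hyper-V isolation relies on nested virtualization, which the "Ev3" and "Dv3" series support. So you probably need to recreate your Service Fabric cluster with an "Ev3" or "Dv3" instance size, because you may not be able to change the SKU of an existing cluster to a different series.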
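Before recreating the cluster, a quick pre-flight check of the VM size you plan to use can save a round trip. This is a minimal sketch with a hypothetical helper `is_dv3_or_ev3` that only recognizes Dv3/Dsv3 and Ev3/Esv3 size names such as "Standard_D2_v3" or "Standard_E4s_v3". It does not query Azure, and other series may also support nested virtualization, so treat a False result as a prompt to check the docs rather than a definitive answer.

```python
import re
import sys

# Hypothetical name pattern for Dv3/Dsv3/Ev3/Esv3 sizes, including
# constrained-vCPU variants such as "Standard_E4-2s_v3". It only inspects the
# name; it does not ask Azure whether the size supports nested virtualization.
_DV3_EV3 = re.compile(r"^Standard_[DE]\d+(-\d+)?[a-z]*_v3$", re.IGNORECASE)


def is_dv3_or_ev3(vm_size: str) -> bool:
    """Return True if vm_size looks like a Dv3 or Ev3 series SKU name."""
    return _DV3_EV3.match(vm_size.strip()) is not None


if __name__ == "__main__":
    # Usage: python check_sku.py Standard_D2_v3 Standard_D2_v2
    for size in sys.argv[1:]:
        if is_dv3_or_ev3(size):
            print(f"{size}: Dv3/Ev3 series")
        else:
            print(f"{size}: not Dv3/Ev3, check nested virtualization support")
```

The check is deliberately name-based so it can run offline, for example against the VM size you are about to put into a cluster template.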