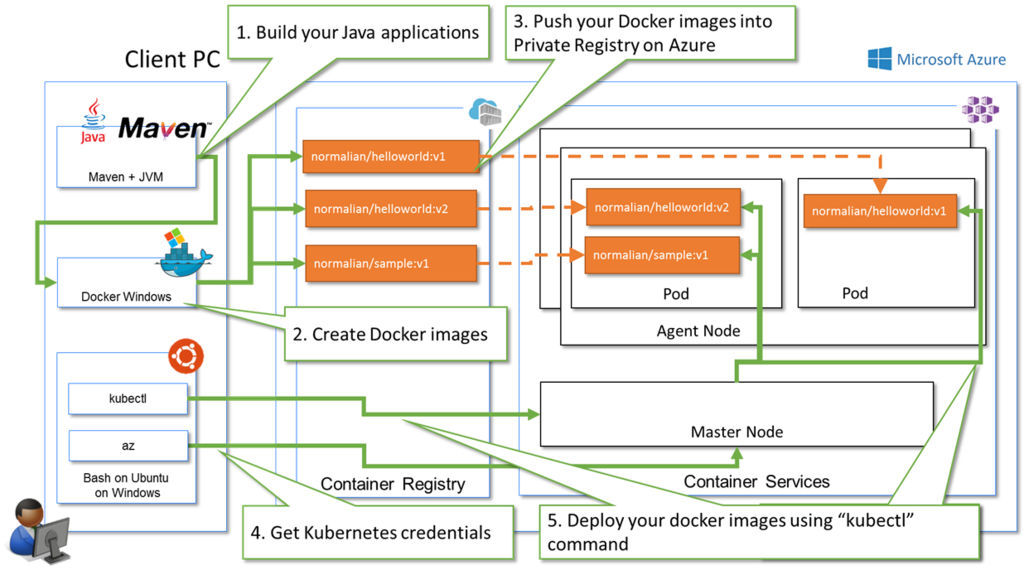

Here is a sample architecture for ACS (Azure Container Service) Kubernetes. The components of Container Service are easy to confuse because there are so many of them, such as Java, Docker for Windows, a private registry, the cluster and others. The diagram above gives an overview of how these pieces fit together in ACS Kubernetes.

ACS Kubernetes components

Running your applications on ACS Kubernetes involves the following components.

- Client PC

  - Java Development Kit (JDK) - http://www.oracle.com/technetwork/java/javase/downloads/index.html

  - Maven - https://maven.apache.org/install.html

  - Docker for Windows - https://docs.docker.com/docker-for-windows/install/

  - Bash on Ubuntu on Windows - https://msdn.microsoft.com/en-us/commandline/wsl/install_guide

  - az (Azure CLI) - https://docs.microsoft.com/en-us/cli/azure/install-azure-cli

  - kubectl - see the section "How to install kubectl into your client machine on 'Bash on Ubuntu on Windows'" in this post

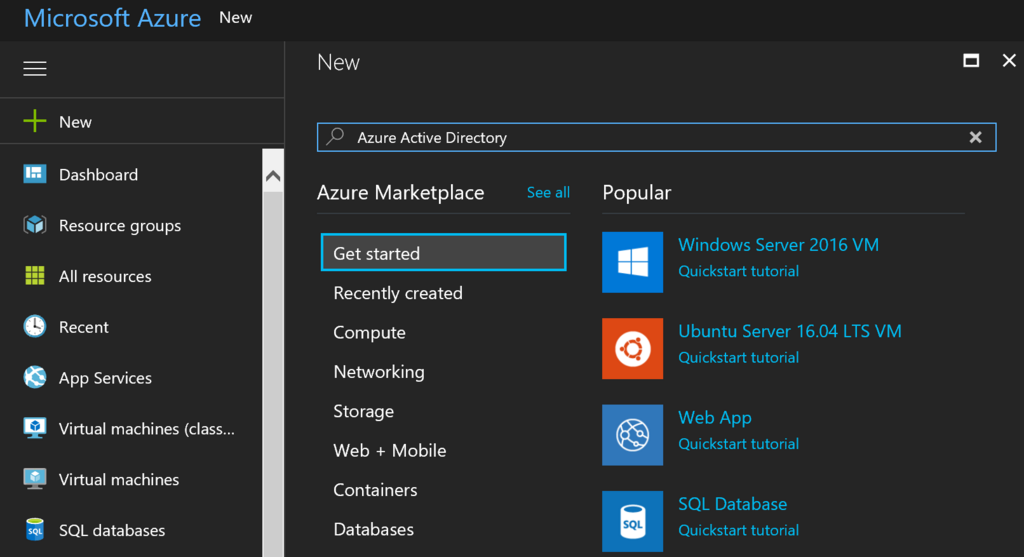

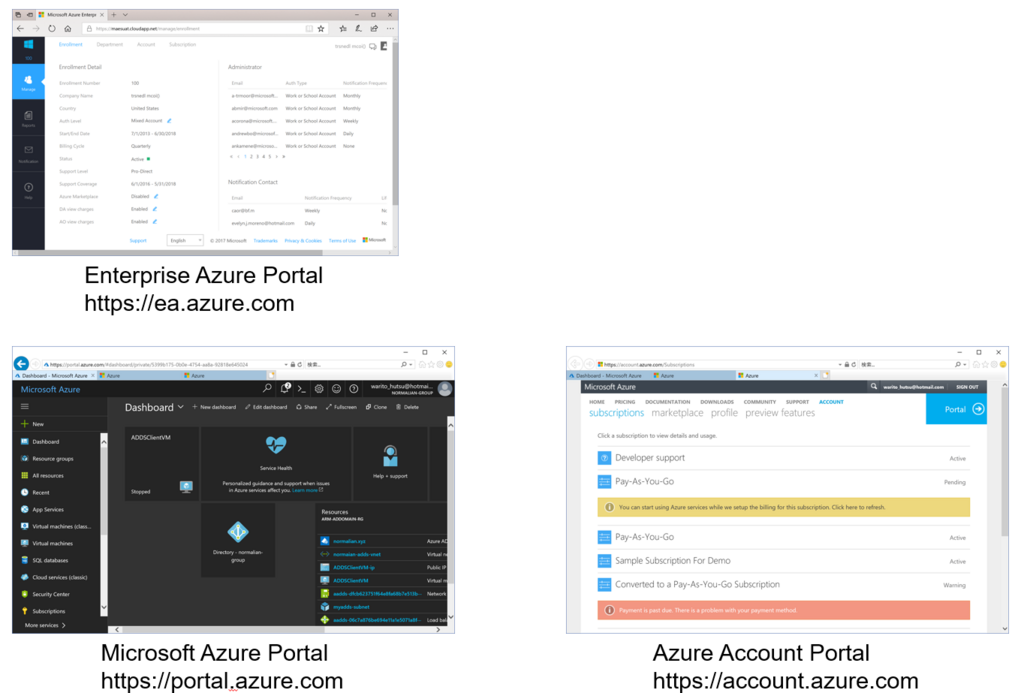

- Microsoft Azure
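Before going further, you may want to confirm which of these command-line tools are reachable from your shell. The script below is a minimal sketch, not part of the original setup: check_tools is a hypothetical helper name, and by default it only checks az and kubectl, the two tools this post installs inside Bash on Ubuntu on Windows. The JDK, Maven and Docker for Windows may be installed on the Windows side, so pass java, mvn or docker as arguments only if you expect them on the PATH of the shell you run it in.

```sh
#!/bin/sh
# Minimal sketch: report which command-line tools are on PATH.
# check_tools is a hypothetical helper, not part of az or kubectl.

check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok: $tool -> $(command -v "$tool")"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  # Exit status is non-zero if at least one tool was not found.
  return "$missing"
}

# Check the tools named on the command line, or by default the two tools
# this post installs inside Bash on Ubuntu on Windows (az and kubectl).
if [ "$#" -eq 0 ]; then
  set -- az kubectl
fi
check_tools "$@"
```

For example, saving it as check-tools.sh (a hypothetical file name) and running sh check-tools.sh az kubectl java prints one ok/missing line per tool and exits with a non-zero status if anything is missing.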

Steps to run your Java applications using ACS Kubernetes

Follow the steps below to run your Java applications.

- Build your Java applications

- Create Docker images

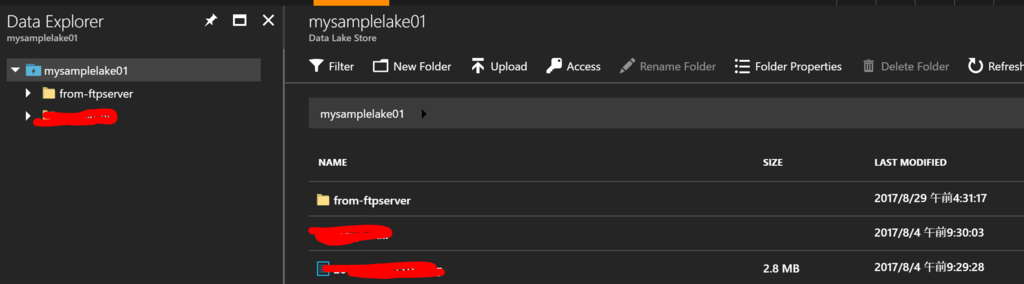

- Push your Docker images into a private registry on Azure

- Get Kubernetes credentials

- Deploy your Docker images using the “kubectl” command

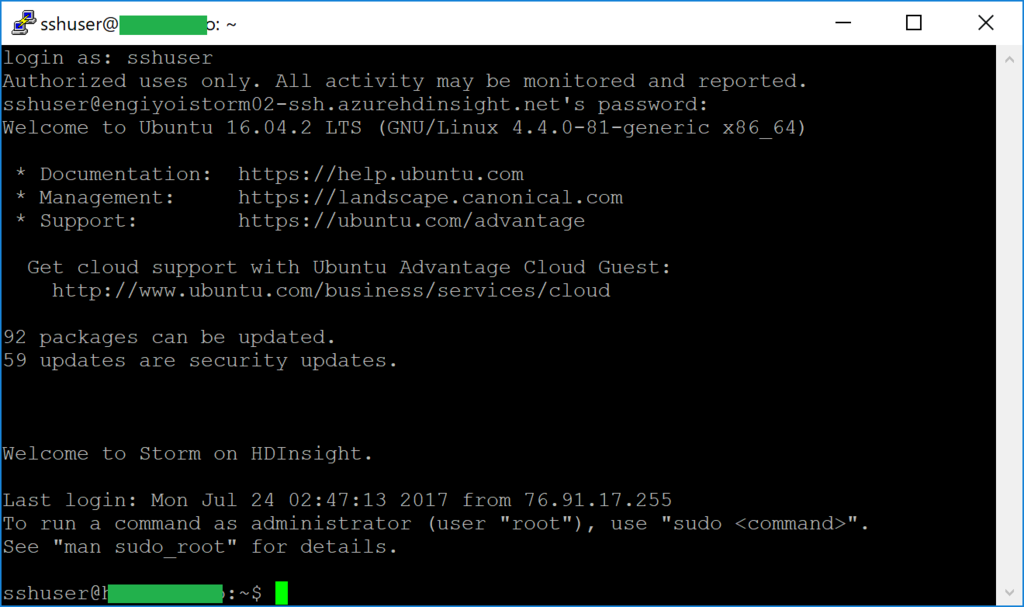
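The steps above can be sketched as a single shell script. This is a minimal sketch under several assumptions, not the author's exact procedure: the resource group, cluster, registry and application names, the SSH key path and port 8080 are hypothetical placeholders; the private registry is assumed to be an Azure Container Registry (hence az acr login); the project is assumed to build with Maven and to contain a Dockerfile; and the cluster is assumed to be allowed to pull from the registry already, for example through an image pull secret. By default it only prints each command (DRY_RUN=1), so nothing is built or pushed by accident.

```sh
#!/bin/sh
# Minimal sketch of the five steps, printed as a dry run by default.
# Every name below is a hypothetical placeholder; override via environment variables.
set -eu

RESOURCE_GROUP="${RESOURCE_GROUP:-my-resource-group}"
CLUSTER_NAME="${CLUSTER_NAME:-my-k8s-cluster}"
SSH_KEY_FILE="${SSH_KEY_FILE:-$HOME/.ssh/id_rsa}"
ACR_NAME="${ACR_NAME:-myregistry}"   # assumes an Azure Container Registry
APP_NAME="${APP_NAME:-myapp}"
TAG="${TAG:-1.0}"
IMAGE="$ACR_NAME.azurecr.io/$APP_NAME:$TAG"

# Print each command, and only execute it when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

deploy_steps() {
  # 1. Build the Java application (assumes a Maven project in the current directory).
  run mvn clean package
  # 2. Create the Docker image (assumes a Dockerfile in the current directory).
  run docker build -t "$IMAGE" .
  # 3. Push the image into the private registry on Azure.
  run az acr login --name "$ACR_NAME"
  run docker push "$IMAGE"
  # 4. Get Kubernetes credentials (the same az command this post uses for kubectl).
  run az acs kubernetes get-credentials --resource-group="$RESOURCE_GROUP" \
    --name="$CLUSTER_NAME" --ssh-key-file="$SSH_KEY_FILE"
  # 5. Deploy the image; port 8080 is an assumed application port.
  run kubectl create deployment "$APP_NAME" --image="$IMAGE"
  run kubectl expose deployment "$APP_NAME" --type=LoadBalancer --port=80 --target-port=8080
}

deploy_steps
```

Once the printed commands look right, run it with DRY_RUN=0 and your own values, for example DRY_RUN=0 ACR_NAME=yourregistry APP_NAME=yourapp sh deploy.sh (deploy.sh being whatever name you saved it under). With Docker for Windows, docker may not be reachable from Bash on Ubuntu on Windows; in that case run the build and push steps from a Windows shell instead. If your kubectl is too old to support kubectl create deployment, adjust step 5 for your version.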

How to install kubectl into your client machine on "Bash on Ubuntu on Windows"

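Run the following commands. They add the Azure CLI package repository, install the Azure CLI (az), sign in to Azure, install kubectl and download the credentials for your cluster. Note that "az acs kubernetes install-cli" writes kubectl to /usr/local/bin, so it fails with "Permission denied" unless you run it with sudo.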

```
normalian@DESKTOP-QJCCAGL:~$ echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ wheezy main" | sudo tee /etc/apt/sources.list.d/azure-cli.list
[sudo] password for normalian:
deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ wheezy main
normalian@DESKTOP-QJCCAGL:~$ sudo apt-key adv --keyserver packages.microsoft.com --recv-keys 417A0893
normalian@DESKTOP-QJCCAGL:~$ sudo apt-get install apt-transport-https
normalian@DESKTOP-QJCCAGL:~$ sudo apt-get update && sudo apt-get install azure-cli
Executing: gpg --ignore-time-conflict --no-options --no-default-keyring --homedir /tmp/tmp.5tm3Sb994i --no-auto-check-trustdb --trust-model always --keyring /etc/apt/trusted.gpg --primary-keyring /etc/apt/trusted.gpg --keyserver packages.microsoft.com --recv-keys 417A0893
...
normalian@DESKTOP-QJCCAGL:~$ az
Welcome to Azure CLI!
---------------------
Use `az -h` to see available commands or go to https://aka.ms/cli.
...
normalian@DESKTOP-QJCCAGL:~$ az login
To sign in, use a web browser to open the page https://aka.ms/devicelogin and enter the code XXXXXXXXX to authenticate.
...
normalian@DESKTOP-QJCCAGL:~$ az acs kubernetes install-cli
Downloading client to /usr/local/bin/kubectl from https://storage.googleapis.com/kubernetes-release/release/v1.7.0/bin/linux/amd64/kubectl
Connection error while attempting to download client ([Errno 13] Permission denied: '/usr/local/bin/kubectl')
normalian@DESKTOP-QJCCAGL:~$ sudo az acs kubernetes install-cli
Downloading client to /usr/local/bin/kubectl from https://storage.googleapis.com/kubernetes-release/release/v1.7.0/bin/linux/amd64/kubectl
normalian@DESKTOP-QJCCAGL:~$ az acs kubernetes get-credentials --resource-group=<resource group name> --name=<cluster name> --ssh-key-file=<ssh key file>
normalian@DESKTOP-QJCCAGL:~$ kubectl get pods
NAME      READY     STATUS    RESTARTS   AGE
```